Neural Network Jet Dog

A simple game playable with a single button, and a neural network made from scratch that learns to master it.

Key points

- Unity game

- Neural network

- Genetic algorithm

The Mini Game

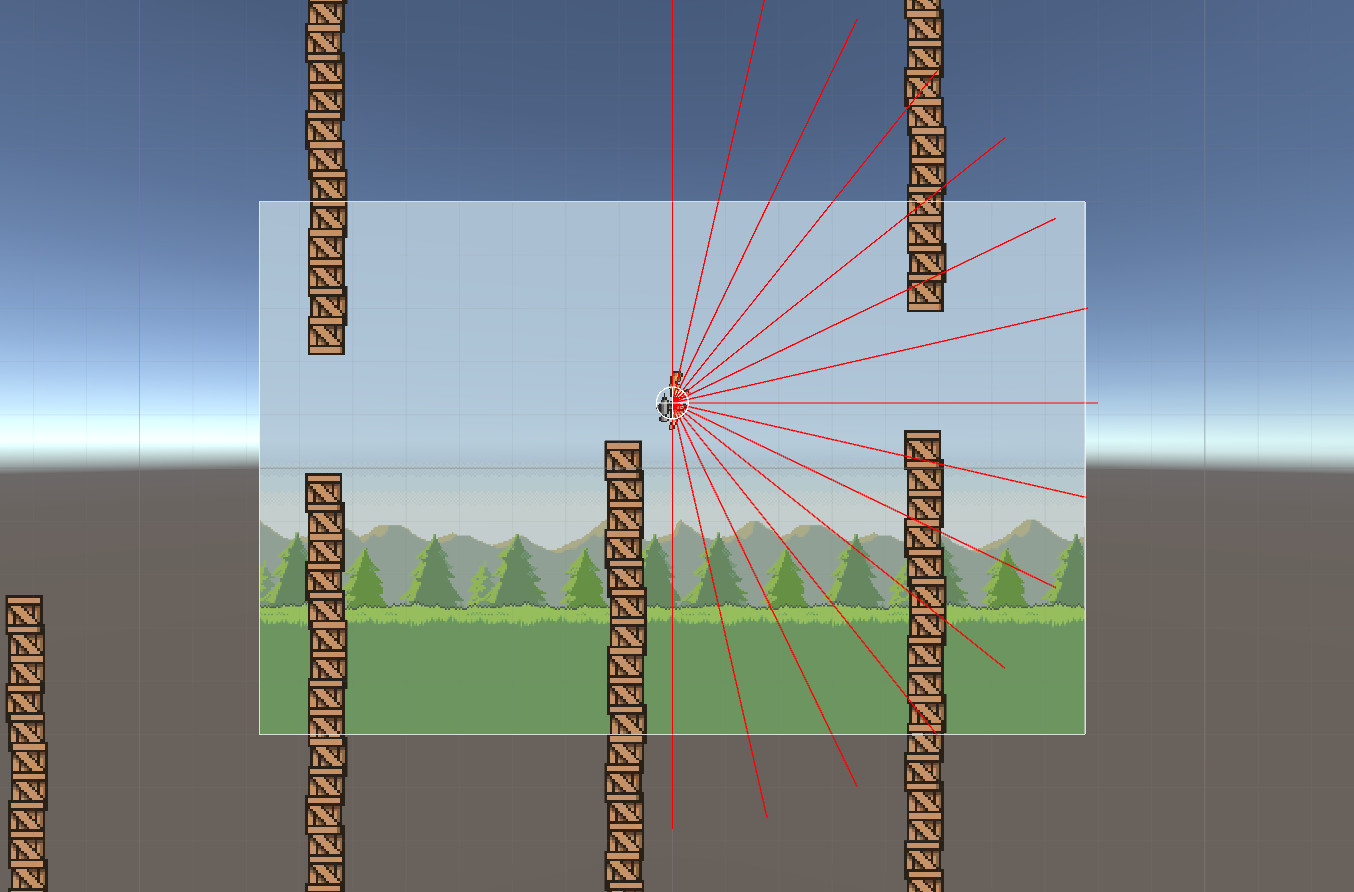

I wanted the game to take a single true-or-false input, so that the output of the network could be as simple as possible (a single boolean). I made a kind of Flappy Bird game, except that you hold the button to add more vertical force to the character instead of tapping to jump. The obstacles are random and different every game.

The game itself was very easy to make, but I hadn't realized that I would need a way to speed it up before trying the network on it. The first version of the game ran on the Unity Update function, which works great for a game made for humans. This game, however, needs to be playable by the computer as fast as possible, without wasting time updating the sprites on the screen. So I remade the game, this time with my own update function, which either waits for the screen to refresh or not, depending on whether a human or the computer is playing.
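As a rough illustration of that idea, here is a minimal sketch in Python (not the actual Unity code). The `Game` class, `FIXED_DT`, `run`, and the 60-step end condition are all hypothetical names and values chosen for the example. The point is only that the simulation advances by the same fixed step either way, and the wait for the frame happens only when a human is playing.

```python
import time

FIXED_DT = 1.0 / 60.0  # simulated seconds per step (assumed value)

class Game:
    """Hypothetical stand-in for the game state; not the author's Unity code."""

    def __init__(self, max_ticks=60):
        self.ticks = 0
        self.max_ticks = max_ticks  # placeholder end condition for the sketch
        self.alive = True

    def step(self, button_held, dt):
        # Advance the simulation by one fixed time step.
        # A real game would apply physics and collisions here using dt.
        self.ticks += 1
        if self.ticks >= self.max_ticks:
            self.alive = False

def run(game, choose_input, human_playing, sleep=time.sleep):
    """Step the game until it ends; wait for the frame only for a human."""
    steps = 0
    while game.alive:
        game.step(choose_input(game), FIXED_DT)
        steps += 1
        if human_playing:
            sleep(FIXED_DT)  # stands in for waiting on the screen refresh
    return steps
```

With `human_playing=False` the loop runs as fast as the CPU allows, which is what makes training on many games practical.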

Neural Network

I made my own neural network based on general explanations of the concept, so that figuring things out by myself would help me better understand how it works at its core. The resulting network may not be optimized in any way, but it worked pretty much on the first try and runs fine.

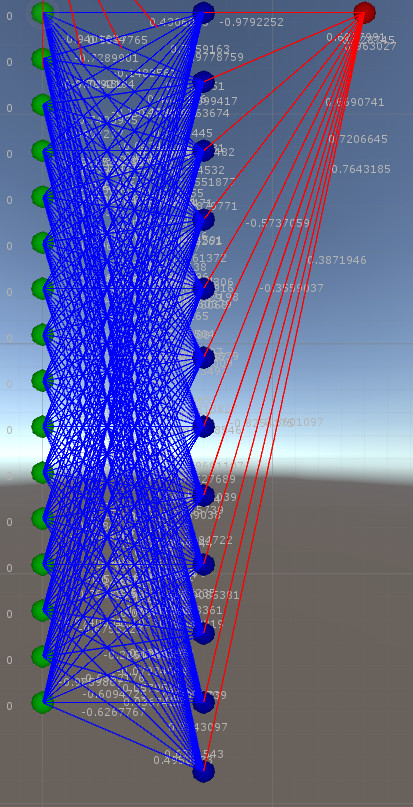

The network can have a variable number of layers and of neurons per layer. I used a step function as the propagation (activation) function, since my game is really simple and linear.
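A network of this kind could be sketched as follows. This is an illustrative Python version, not the project's own implementation: the `Network` class, the per-neuron bias term, the weight range of -1 to 1, and the threshold at zero are all assumptions made for the example.

```python
import random

def step(x):
    # Step function: the neuron fires (1.0) only if its weighted sum is positive.
    return 1.0 if x > 0.0 else 0.0

class Network:
    """Fully connected feedforward network with step neurons (sketch)."""

    def __init__(self, layer_sizes, rng=random):
        # One weight list per neuron; the last entry is a bias (an assumption,
        # the original write-up does not say whether biases are used).
        self.weights = [
            [[rng.uniform(-1.0, 1.0) for _ in range(n_in + 1)]
             for _ in range(n_out)]
            for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
        ]

    def forward(self, inputs):
        values = list(inputs)
        for layer in self.weights:
            # zip stops at len(values), so the trailing bias is added separately.
            values = [
                step(sum(w * v for w, v in zip(neuron, values)) + neuron[-1])
                for neuron in layer
            ]
        return values

    def decide(self, inputs):
        # Single boolean output: hold the button or not.
        return self.forward(inputs)[0] == 1.0
```

For example, `Network([5, 4, 1])` would take five inputs, pass them through one hidden layer of four neurons, and produce the single boolean the game needs.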

Training: Genetic Algorithm

I used a very intuitive genetic algorithm for training. As with the network itself, I tried making my own version based on my understanding of the concept: I simply applied the processes of natural selection and evolution to my network.

The algorithm starts by making a pool of random individuals (networks with random neuron weights). Each individual plays the game and gets a score (the time it stayed alive). The individuals that scored the lowest are killed (natural selection). Some of the survivors are mutated at random (some of their weights are modified), then the breeding process replaces the dead with new children. To create a child, two random individuals are picked, and each weight of the child is taken from one of the two parents at random. From there it loops back to the beginning and makes the individuals play the game again, until one individual reaches the target score.
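The loop described above can be sketched like this, with each individual reduced to a flat list of weights. This is a hedged Python illustration rather than the original code: the function names, the `play_game` callback, and every default setting (`pop_size`, `kill_ratio`, `mutate_chance`, `mutation_rate`) are assumptions, as is the choice to replace a mutated weight with a fresh random value.

```python
import random

def crossover(parent_a, parent_b, rng):
    # Each weight of the child comes from one of the two parents at random.
    return [rng.choice((wa, wb)) for wa, wb in zip(parent_a, parent_b)]

def mutate(weights, rate, rng):
    # Replace each weight with a new random value with probability `rate`.
    return [rng.uniform(-1.0, 1.0) if rng.random() < rate else w
            for w in weights]

def evolve(play_game, n_weights, target_score, pop_size=100, kill_ratio=0.5,
           mutate_chance=0.3, mutation_rate=0.1, max_generations=1000,
           rng=None):
    """Return (best individual, generation at which the loop stopped)."""
    rng = rng or random.Random()
    pool = [[rng.uniform(-1.0, 1.0) for _ in range(n_weights)]
            for _ in range(pop_size)]
    best = pool[0]
    for generation in range(max_generations):
        # Every individual plays the game and gets a score.
        scored = sorted(((play_game(ind), ind) for ind in pool),
                        key=lambda pair: pair[0], reverse=True)
        best_score, best = scored[0]
        if best_score >= target_score:
            return best, generation
        # Natural selection: the lowest scorers are removed.
        n_keep = max(2, int(pop_size * (1.0 - kill_ratio)))
        survivors = [ind for _, ind in scored[:n_keep]]
        # Some survivors are mutated at random.
        survivors = [mutate(ind, mutation_rate, rng)
                     if rng.random() < mutate_chance else ind
                     for ind in survivors]
        # Breeding refills the pool with children of two random survivors.
        children = []
        while len(survivors) + len(children) < pop_size:
            parent_a, parent_b = rng.sample(survivors, 2)
            children.append(crossover(parent_a, parent_b, rng))
        pool = survivors + children
    return best, max_generations
```

Here `play_game` would load the weights into a network, run one game, and return the survival time. Note that in this sketch even the best survivor can be mutated, which matches the description but means a good individual can be lost between generations.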

Inputs

To detect the obstacles, the AI takes as inputs a set of raycasts at different angles in front of it. The value returned by each raycast, and taken as input by the AI, is the distance to the obstacle in that direction. This way the AI doesn't "cheat": it doesn't know exactly where the gap in the obstacles is, and it sees the way a human player would.
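To make the input vector concrete, here is a small Python sketch of distance-by-raycast against axis-aligned rectangular obstacles. It is not the Unity raycast used in the project: the function names, the five angles, the `max_dist` cap returned when a ray hits nothing, and the `(xmin, ymin, xmax, ymax)` rectangle format are all assumptions for illustration.

```python
import math

def ray_distance(origin, angle_deg, rect, max_dist):
    """Distance along a ray to an axis-aligned rectangle, or max_dist on a miss."""
    ox, oy = origin
    dx = math.cos(math.radians(angle_deg))
    dy = math.sin(math.radians(angle_deg))
    xmin, ymin, xmax, ymax = rect
    t_near, t_far = 0.0, max_dist
    # Slab test: intersect the ray with the x range, then with the y range.
    for o, d, lo, hi in ((ox, dx, xmin, xmax), (oy, dy, ymin, ymax)):
        if abs(d) < 1e-12:
            # Ray is parallel to this axis: it misses unless it starts inside.
            if o < lo or o > hi:
                return max_dist
        else:
            t1, t2 = (lo - o) / d, (hi - o) / d
            t_near = max(t_near, min(t1, t2))
            t_far = min(t_far, max(t1, t2))
    return t_near if t_near <= t_far else max_dist

def sense(origin, obstacles, angles_deg=(-60, -30, 0, 30, 60), max_dist=20.0):
    # One input per ray: the distance to the closest obstacle in that direction.
    return [
        min([ray_distance(origin, a, r, max_dist) for r in obstacles] + [max_dist])
        for a in angles_deg
    ]
```

The list returned by `sense` has one distance per angle and could be fed directly to the network as its input layer.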

Results

It took 600 generations with a population of 500 to obtain an individual that could play the game perfectly. That's ~4 hours on my (slow) computer. This could be improved with a better training algorithm, better settings (population size, mutation rate, etc.), a smarter scoring system (as the individuals get better at the game, they take longer to lose, which slows down the process), or by running the training on the GPU instead of the CPU.